What is a robot.txt and why is it important for your website?

.avif)

One of the essential components in technical SEO is robots.txt, a powerful file that guides search engines in reading and indexing a website.

What exactly is this file and why is it so important? Join us on this journey to find out.

What is a robots.txt?

The robots.txt is a plain text file used to tell search engine bots which pages they can crawl and which they can't. This file plays a fundamental role in managing web crawling, helping webmasters to control the visibility of their content in search engines.

How to see if I have a robots.txt file?

Checking if your website has a robots.txt file is a simple process. To do so, simply open your web browser and type the URL of your domain followed by "/robots.txt”.

For example, if your domain is www.example.com, you must enter www.example.com/robots.txt in the address bar and press Enter. If the file exists, the browser will display its contents, allowing you to see the established rules. If it doesn't exist, you'll see a 404 error message, stating that the file hasn't been found.

You can use tools such as Google Search Console to verify that the file is being correctly read by the Google bot, as well as view the history of robots.txt files on your website.

How does a robots.txt work?

The structure of a robots.txt file is quite simple and linear: once you understand the basic rules and instructions, it will be easy to read and create a properly functioning robot.txt file.

You should keep a few considerations in mind in your robots.txt:

· Always after the syntax you must add a colon (:)

· The rules of robots.txt are case sensitive

· Do not block specific pages with this rule, its use is more aimed at blocking access to subdirectories with several pages

The syntax of robot.txt is as follows:

- User agent

- · Disallow

- · Allow

- · Sitemap

Let's see them in detail.

User agent

User agent specifies which search engine or robot the rules apply to. Each search engine has its own name, but if you don't specify one and you enter *, you understand that the rules apply to all bots.

Use:

- User agent: * is used to apply the rules to all search engines.

- It is possible to specify different rules for different search engines.

Disallow command

Disallow indicates which parts of the website should not be crawled by the search engines specified in the User-agent directive.

Use:

- Each Disallow line must be followed by the relative path that you want to block.

- If you want to block the entire website, you use a single forward slash (/)

- It is used to block subdirectories, or more colloquially folders, specific or everything that comes out of certain folders

Allow command

Allow specifies what parts of the website can be crawled by search engines, even if a broader Disallow rule might imply the opposite. It is useful in complex combinations where we want to track a subfolder of another previously locked one.

Use:

- Each Allow line must be followed by the relative access path that you want to allow.

- It is not necessary to add this policy for each folder on the website, it is only recommended when you have to specify a folder that could be blocked by another rule.

Sitemap command

Sitemap provides the location of the different XML sitemap files on the website. This file helps search engines find all the URLs of the site that should be crawled and indexed, that is, all the URLs of sites that respond with a code of 200 and have the index tag.

Use:

- The Sitemap directive must be followed by the absolute URL of the sitemap file.

- You can add the different sitemap.xml that the website has (language versions, images, documents, etc.)

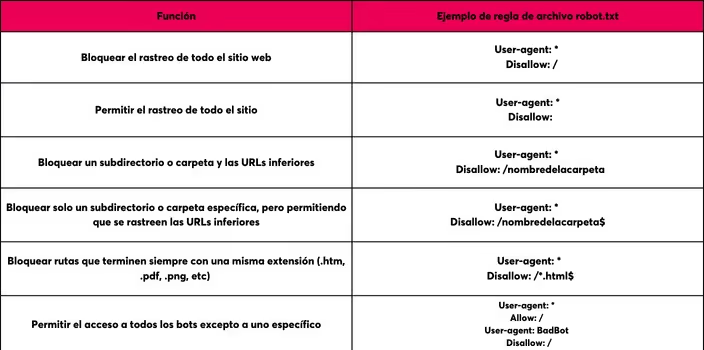

Practical examples of using robot.txt rules

Talking about the bot and its rules can seem very abstract, so we have created a table with some concrete examples:

How to create a archivo.txt?

To create a robots.txt file and configure it correctly on your website, follow these simple steps:

1. Write the rules in a text editor: To write the rules, use any simple text editor (making sure it's in plain text mode) and define the rules using the `User-Agent`, `Disallow`, `Allow` and `Sitemap` directives.

2. Upload the file to the root of the domain: Save the file with the name `robots.txt` and upload it to the root of your domain.

3. Verify and test: Access `http://www.tusitio.com/robots.txt` from your browser to make sure it's accessible. Use tools such as Google Search Console to test and validate the file's configuration, ensuring that search engines interpret it correctly.

Why robots.txt is important for your website's SEO

Using the robots.txt file offers multiple advantages for managing and optimizing a website. The following are some of the most significant benefits that can be obtained by properly implementing this file.

Optimizing tracking

The robots.txt file plays a fundamental role in optimizing the crawling of a website by search engines. When specifying which pages or files should be crawled and which should be ignored, this file guides search robots to focus on the most relevant content. For example, you can prevent sensitive pages for your company, pages generated automatically by the CMS, or files that provide no value to the user from being tracked.

In doing so, search engines can use the resources they have for each site more efficiently, spending more time crawling and indexing the pages that really matter. Not only does this improve your site's coverage in search results, but it can also speed up the indexing of new content, ensuring that the most important pages are updated more quickly in search engine indexes.

Improving site performance

Proper use of the robots.txt file also contributes significantly to the improved website performance, especially if they are websites with many levels of pages. When robots try to crawl the pages of a site without restrictions, they can consume a lot of server resources. This can lead to, in extreme cases, the website crashing due to server overload.

By limiting search bots' access to only those pages that you really need to be read and indexed, You reduce the number of requests your server must handle. Not only does this free up resources for human visitors, improving their browsing experience, but it can also result in a lower operating cost if you're using a server with limited resources or a hosting plan that charges based on bandwidth usage.

Sensitive content protection

Protecting sensitive content is another of the crucial functions of the robots.txt file. On many websites, there are pages and files that contain private or confidential information that should not be publicly accessible through search engines. These can include login pages, administrative directories, files with internal company information, or even content under development that is not yet ready to be released to the public.

By specifying in the robots.txt file that these elements should not be tracked, an additional layer of security is added. Although it's not a foolproof security measure (since robots.txt files are public and can be read by anyone), it's an important first step in preventing this information from appearing in search results. For more robust protection, these sensitive files and pages too they must be protected by credentials and a login.

Best practices for optimizing a robots.txt

- Regular update: It is important to keep the robots.txt file updated to reflect any changes in the structure and content of the website.

- Verification and testing: Use tools such as Google Search Console to test and verify the effectiveness of the robots.txt file. This ensures that the established rules are applied correctly.

Common Mistakes and How to Avoid Them

The robots.txt file can be a source of errors that negatively impact the SEO and functionality of a website. Knowing these common errors and how to avoid them is crucial to ensure that your website is properly indexed and accessible to search engines.

Robots.txt not in the root of the domain

Bots can only discover the file if it is at the root of the domain. For that reason, between the .com domain (or equivalent) of your website and the file name 'robots.txt' in the URL of your robots.txt file, there should be only a forward slash (/), for example:

www.esunexample.com/robots.txt

If there is a subfolder (www.esunexample.com/en-es/robots.txt), your robots.txt file will not be properly visible to search robots and your website may behave as if it didn't have a robots.txt file. To fix this problem, move your robots.txt file to the root of the domain.

Also, if you work with subdomains, it's important to consider that you must create a robots.txt file for each of them, different from the main domain.

Syntax errors and important content blocking

A small error in the robots.txt file can result in important pages being blocked. For example, adding an extra slash could prevent the entire site from being crawled or prevent certain crucial pages from being crawled, meaning that they are not indexed by search engines. This could decrease organic traffic to those pages and negatively affect your site's overall performance in terms of visibility and positioning in search results.

It is crucial to carefully review and verify the robots.txt file to ensure that it does not contain errors that could limit search engine access to important areas of your website.

Update failed

Not keeping the file up to date can lead to unwanted pages being indexed or important pages being left out of the search engine index.

How Novicell can help you with the robots.txt file

At Novicell we can advise you on the creation and configuration of your robots.txt file and on many other aspects of Technical SEO. Our experts will evaluate your website to define the most appropriate rules, ensuring that search engines crawl only relevant content, thus improving the visibility and performance of your site.

In addition, at Novicell we carry out continuous monitoring and strategic adjustments to keep your website updated, protecting your sensitive content and optimizing your online presence. ¡Contact us now!

Cómo podemos ayudarte

Consulta los servicios con los que te ayudaremos a conseguir tus objetivos digitales.